Amazon EKS runs the Kubernetes management infrastructure across multiple AWS Availability Zones, automatically detects and replaces unhealthy control plane nodes, and provides on-demand upgrades and patching when required.

You simply provision worker nodes and connect them to the provided Amazon EKS endpoint.

The architecture includes the following resources:

EKS Cluster - AWS managed Kubernetes control plane node + EC2-based worker nodes.

AutoScaling Group

Associated VPC, Internet Gateway, Security Groups, and Subnets

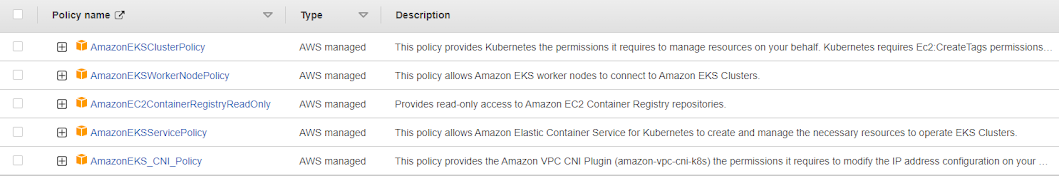

Associated IAM Roles and Policies

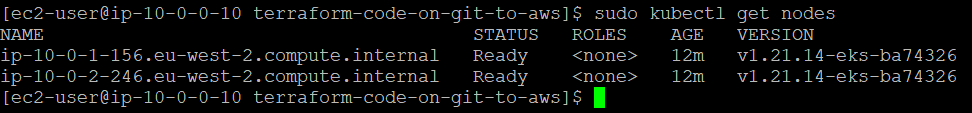

Once the cluster setup is complete, install kubectl on your bastion host to control your EKS cluster.

```shell
# Install kubectl
$ curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.23.6/bin/linux/amd64/kubectl
$ chmod +x kubectl && sudo mv kubectl /usr/bin/kubectl
```
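The download above is used without any integrity check. A minimal sketch of the checksum verification that the Kubernetes install docs recommend: fetch the `kubectl.sha256` file published alongside the binary and compare the two before the `chmod`/`mv` step. The helper name `verify_sha256` is hypothetical.

```shell
# verify_sha256 FILE CHECKSUM_FILE (hypothetical helper)
# Succeeds only if FILE's SHA-256 matches the bare hash stored in CHECKSUM_FILE,
# e.g. the kubectl.sha256 file published next to each kubectl release binary.
verify_sha256() {
  file=${1:?usage: verify_sha256 FILE CHECKSUM_FILE}
  sum_file=${2:?usage: verify_sha256 FILE CHECKSUM_FILE}
  # sha256sum --check expects "<hash>  <filename>" (two spaces) on stdin.
  echo "$(cat "$sum_file")  $file" | sha256sum --check --status
}
```

Usage, assuming the checksum is published at the matching release path: download `https://dl.k8s.io/release/v1.23.6/bin/linux/amd64/kubectl.sha256` into the same directory and run `verify_sha256 kubectl kubectl.sha256 && echo "checksum OK"` before moving the binary into `/usr/bin`.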

In this article, I describe how my code, a Jenkinsfile plus the Terraform code, lives on a local machine and is pushed from there to my Git repository.

I then created a Jenkins pipeline job that pulls the code from Git and deploys it on AWS. When the Terraform code runs successfully, it deploys an Amazon-managed EKS cluster with two Amazon EC2 worker nodes: one t2.micro on-demand instance and one t2.medium spot instance.

https://github.com/punitporwal07/git-jenkins-tf-aws/blob/main/eks-by-tf-on-aws.tf

```shell
# Pushing code from local to GitHub repository
$ git init
$ git add .
$ git commit -m "sending Jenkinsfile & terraform code"
$ git push origin master
```
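The commands above push to `master` and assume an `origin` remote already exists, while the repository linked above uses a `main` branch. A sketch that adds the remote and pushes to `main`, assuming the GitHub repository already exists; the helper name is hypothetical.

```shell
# push_to_main REMOTE_URL (hypothetical helper)
# Commits everything in the current directory, renames the current branch to
# 'main', adds REMOTE_URL as 'origin' (fails if 'origin' already exists), and
# pushes 'main' with upstream tracking so later pushes need no arguments.
push_to_main() {
  remote_url=${1:?usage: push_to_main REMOTE_URL}
  git init -q &&
  git add . &&
  git commit -q -m "sending Jenkinsfile & terraform code" &&
  git branch -M main &&
  git remote add origin "$remote_url" &&
  git push -q -u origin main
}
```

For example: `push_to_main https://github.com/punitporwal07/git-jenkins-tf-aws.git`.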

If you are using a bastion host to test this setup, make sure an AWS profile is configured for a user that has all the required policies attached.

My profile for this job looks like this:

```shell
$ cat ~/.aws/credentials
[dev]
aws_access_key_id = AKIAUJSAMPLEWNHXOU
aws_secret_access_key = EIAjod83jeE8fzhx1samplerjrzj5NrGuNUT6
region = eu-west-2
```
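Before running anything, it helps to confirm which IAM identity the profile actually resolves to. A minimal sketch using `aws sts get-caller-identity`; the helper name is hypothetical.

```shell
# caller_arn PROFILE (hypothetical helper)
# Prints the ARN of the IAM identity that PROFILE resolves to, so you can
# confirm the bastion host is using the intended user.
caller_arn() {
  aws sts get-caller-identity \
    --profile "${1:?usage: caller_arn PROFILE}" \
    --query Arn --output text
}
```

Running `caller_arn dev` should print the ARN of the IAM user behind the `dev` profile.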

Policies attached to my user to provision this EKS cluster:

Plus an inline policy for EKS administrator access:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "eksadministrator",
      "Effect": "Allow",
      "Action": "eks:*",
      "Resource": "*"
    }
  ]
}
```
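If you prefer the CLI to the console, the inline policy can be attached with `aws iam put-user-policy`. A sketch, assuming it is run with credentials allowed to call `iam:PutUserPolicy`; the helper name and the policy name `eksadministrator` are assumptions.

```shell
# attach_eks_admin_policy IAM_USER (hypothetical helper)
# Writes the EKS administrator inline policy to a temporary file and attaches
# it to IAM_USER, then removes the file and returns the aws command's status.
attach_eks_admin_policy() {
  user=${1:?usage: attach_eks_admin_policy IAM_USER}
  policy_file=$(mktemp) || return 1
  cat > "$policy_file" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    { "Sid": "eksadministrator", "Effect": "Allow", "Action": "eks:*", "Resource": "*" }
  ]
}
EOF
  # Assumed policy name; any inline policy name works.
  aws iam put-user-policy --user-name "$user" \
    --policy-name eksadministrator \
    --policy-document "file://$policy_file"
  status=$?
  rm -f "$policy_file"
  return $status
}
```

For example: `attach_eks_admin_policy punit`.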

Everything required is now in place to run the Jenkins pipeline job, which pulls the code from GitHub and uses Terraform to provision an AWS-managed EKS cluster.

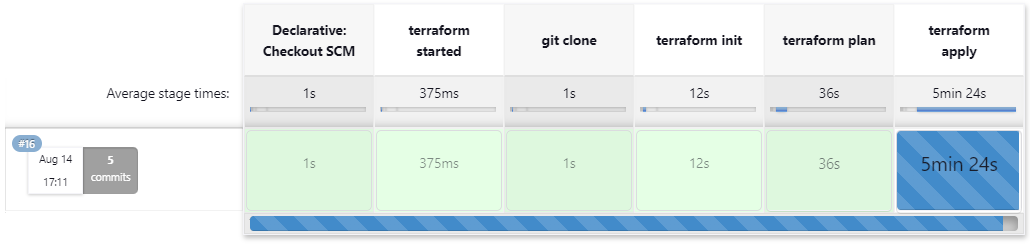
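The Jenkinsfile in the repository defines the actual stages. As a hedged sketch, assuming Terraform 0.14 or later on the Jenkins agent (for `-chdir`), a deploy stage might boil down to this non-interactive sequence; the helper name is hypothetical.

```shell
# tf_deploy TF_DIR (hypothetical helper)
# Runs init, plan, and apply without prompts. Applying a saved plan file does
# not ask for approval, so no -auto-approve flag is needed.
tf_deploy() {
  dir=${1:?usage: tf_deploy TF_DIR}
  terraform -chdir="$dir" init -input=false &&
  terraform -chdir="$dir" plan -input=false -out=tfplan &&
  terraform -chdir="$dir" apply -input=false tfplan
}
```

Saving the plan and applying exactly that file means the pipeline applies what was planned, even if the code changes between the two steps.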

A successful job will look like the below upon completion.

Finally, the EKS cluster is created, which can be verified from the AWS console.
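The AWS CLI can confirm the same thing. A minimal sketch, assuming the `dev` profile and the `eu-west-2` region are in effect; the helper name is hypothetical.

```shell
# cluster_status CLUSTER_NAME (hypothetical helper)
# Prints the cluster status reported by EKS (ACTIVE once provisioning is done).
cluster_status() {
  aws eks describe-cluster --name "${1:?usage: cluster_status CLUSTER_NAME}" \
    --query cluster.status --output text
}
```

`cluster_status myEKSCluster` should print `ACTIVE` once the pipeline has finished.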

Accessing your cluster

```shell
# Export the necessary variables as below and set the cluster context
$ export KUBECONFIG=$LOCATIONofKUBECONFIG/kubeconfig_myEKSCluster
$ export AWS_DEFAULT_REGION=eu-west-2
$ export AWS_DEFAULT_PROFILE=dev
$ aws eks update-kubeconfig --name myEKSCluster --region=eu-west-2
Added new context arn:aws:eks:eu-west-2:295XXXX62576:cluster/myEKSCluster to $PWD/kubeconfig_myEKSCluster
```
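With the kubeconfig in place, you can check that both workers (the on-demand and the spot instance) have joined the cluster. A sketch that parses `kubectl get nodes --no-headers`, whose second column is the node STATUS; the helper name is hypothetical.

```shell
# ready_node_count (hypothetical helper)
# Counts nodes whose STATUS column is exactly "Ready" in `kubectl get nodes`.
ready_node_count() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

`ready_node_count` should print `2` once both worker nodes are up.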

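If kubectl rejects your requests as unauthorized at this point (for example, because the cluster was created by a different IAM identity than the one configured on your bastion host), this can be fixed by updating the roles and users in the aws-auth ConfigMap of your EKS cluster: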

```shell
# From your bastion host
$ kubectl edit configmap aws-auth -n kube-system
```

```yaml
apiVersion: v1
data:
  mapAccounts: |
    []
  mapRoles: |
    - rolearn: arn:aws:iam::2951XXXX2576:role/TF-EKS-Cluster-Role
      username: punit
      groups:
        - system:masters
        - system:bootstrappers
        - system:nodes
  mapUsers: |
    - userarn: arn:aws:iam::2951XXXX2576:user/punit
      username: punit
      groups:
        - system:masters
```
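After saving the ConfigMap, you can check that your identity now has full access. A sketch, assuming kubectl is pointed at the cluster through the kubeconfig set up earlier; the helper name is hypothetical.

```shell
# has_full_access (hypothetical helper)
# Succeeds if the current identity may perform any verb on any resource in
# every namespace, which members of system:masters can.
has_full_access() {
  [ "$(kubectl auth can-i '*' '*' --all-namespaces 2>/dev/null)" = "yes" ]
}
```

For example: `has_full_access && echo "aws-auth mapping works"`.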

Known Issues -

In Jenkins, open Pipeline Syntax, choose git: Git as the Sample Step, enter your repository details, and click Generate Pipeline Script. It produces a step like this:

```groovy
git branch: 'main', credentialsId: 'fbd18e1b-sample-43cd-805b-16e480a8c273', url: 'https://github.com/punitporwal07/git-jenkins-tf-aws-destroy.git'
```
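Since the generated step pins `branch: 'main'`, it is worth confirming that this branch actually exists on the remote (for example, if your pushes went to `master`). A sketch using `git ls-remote --exit-code`, which returns a non-zero status when no matching ref is found; the helper name is hypothetical.

```shell
# branch_exists REPO_URL BRANCH (hypothetical helper)
# Succeeds only if refs/heads/BRANCH exists on REPO_URL.
branch_exists() {
  repo=${1:?usage: branch_exists REPO_URL BRANCH}
  branch=${2:?usage: branch_exists REPO_URL BRANCH}
  git ls-remote --exit-code --heads "$repo" "refs/heads/$branch" >/dev/null
}
```

For example: `branch_exists https://github.com/punitporwal07/git-jenkins-tf-aws-destroy.git main || echo "branch 'main' not found"`.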
